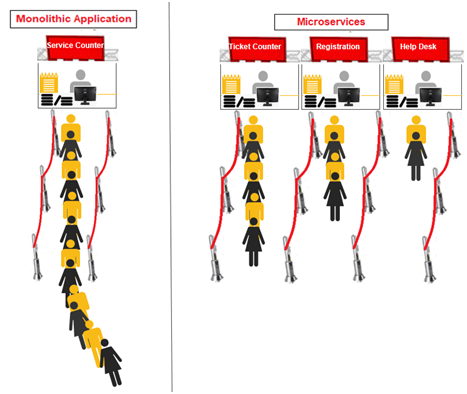

Most organisations have moved to smarter ways of creating, deploying and managing applications, rather than sticking to the traditional monolithic approach of building one big, bulky application that is hard to manage and hard to extend. A monolith is like having only one counter for buying tickets, managing new and existing registrations, dealing with support, and so on.

A system like this spells disaster in terms of business operations, time, and money, and most importantly it delivers poor customer support.

This blog explores the benefits of using Microservices to isolate and separately handle the various functional sections or departments.

What is a Microservice?

A Microservice is the end product of the Microservice Architecture, in which each service is complete and fully functional, has its own data model, and covers only a single business scope. Microservices are loosely coupled and can be accessed by other services, programs, or applications.

Advantages of Using Microservices

- Each service can be developed, tested and deployed individually by different teams and is technology neutral.

- They are robust and bring in the concept of high availability, as a single service is dedicated to handling a single task.

- Each service can be scaled up or down independently, depending on demand.

- Microservices offer better fault tolerance, as the failure of one service does not stop the other services from running.

Disadvantages of Using Microservices

Despite the huge benefits of using Microservices, they do come with some challenges:

- Debugging faulty services can be time-consuming for large organisations that have hundreds of Microservices or more running. This is due to the need to manually debug and analyse the logs of each service.

- Service deployment is another bottleneck, as teams need a good knowledge of the other services and external references used by the service being deployed.

- Network latency can be expected on remote calls.

- More services mean more resource utilisation.

- Testing each service can be cumbersome, depending on the number of services or components that must be tested with it.

- Reports must be created and managed for each service in order to analyse transaction flows, errors and downtime.

- The view of the network is incomplete and inconsistent, which limits observability.

The concept of the Service Mesh emerged to address these limitations of Microservices.

What is a Service Mesh?

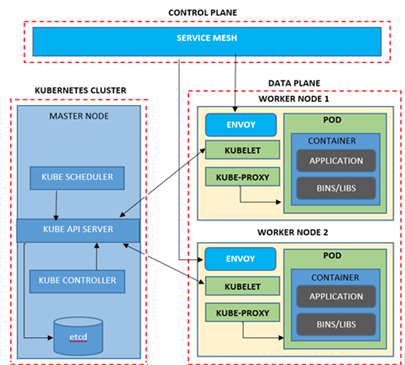

A Service Mesh acts as a layer between the services and the network, meaning that a request to any API is first governed by the Service Mesh. The Kubernetes (K8s) cluster environment can be divided into two parts: the Control Plane and the Data Plane. The diagram above shows the Service Mesh architecture down to its operational details. In this approach, the applications communicate through Envoy proxies, also known as sidecars, which add security and make the services easier to manage. These proxies act as load balancers, routing requests to the respective services on different nodes of a cluster. They bring the advantages of reduced operational complexity and transparent service communication. A Service Mesh is not recommended for rapidly evolving applications.
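To make the load-balancing role of the proxies more concrete, here is a minimal Python sketch of round-robin routing. It is not how Envoy is implemented; the `SidecarProxy` class, the `make_instance` helper and the node names are hypothetical and exist only for illustration.

```python
from itertools import cycle
from typing import Callable, Dict, List

# Hypothetical stand-in for a service instance: takes a request path
# and returns a response string.
Handler = Callable[[str], str]


class SidecarProxy:
    """Toy proxy that spreads requests for one service across its instances."""

    def __init__(self, instances: Dict[str, Handler]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = instances
        self._next_name = cycle(list(instances))  # round-robin order
        self.log: List[str] = []  # which instance served each request

    def handle(self, path: str) -> str:
        name = next(self._next_name)
        self.log.append(name)
        return self._instances[name](path)


def make_instance(node: str) -> Handler:
    """Build a fake service instance that reports the node it runs on."""
    return lambda path: f"{node} served {path}"


proxy = SidecarProxy({
    "node-a": make_instance("node-a"),
    "node-b": make_instance("node-b"),
})
responses = [proxy.handle("/tickets") for _ in range(4)]
```

The caller only ever talks to the proxy, which is what makes the routing transparent to the application. A real mesh would also account for instance health and changing addresses, which this sketch leaves out.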

Benefits of Service Mesh

A Service Mesh can make the work of both developers and the DevOps team a lot easier by providing dashboards that can be customised for tracing and for managing features such as security policies and authentication. The main benefits of a Service Mesh are:

- Runtime Monitoring of applications can be done using custom dashboards. These provide an insight into the overall health of the deployed applications and services, which can be examined in further detail based on response times, delays, failures, workload distribution, etc. Kiali and Grafana are commonly used visualisation tools.

- Security is enforced by configuring traffic encryption, authentication, and authorisation on all service communication, and by applying security policies. Because traffic passes through the Envoy proxies, services do not communicate with each other directly.

- Circuit Breakers help to manage incoming service requests gracefully by defining limits and thresholds. Throttling logic can also be applied to reject any further requests that exceed the maximum threshold. This increases the fault tolerance and resilience of the system.

- Polyglot problems are solved with a Service Mesh, as it supports applications written in any programming language. This can also be described as a heterogeneous deployment of services.

- The Telemetry feature of most Service Mesh tools provides metrics on latency, traffic, errors, and saturation, which aid the observability of network traffic. Prometheus is a tool used for collecting telemetry metrics.

- Canary Releases, meaning controlled deployments to a subset of traffic, are possible through traffic control.

- Service discovery maps the proxies correctly to the dynamically changing IP addresses of services deployed on various cluster nodes.
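The circuit breaker idea from the list above can be sketched in a few lines of Python. This is a simplified illustration, not the logic used by any particular Service Mesh; the class name, the `max_failures` and `reset_after` parameters and the injected `clock` are assumptions made for the example.

```python
import time
from typing import Callable, List, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures  # failures tolerated before opening
        self.reset_after = reset_after    # cool-down in seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], str]) -> str:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, request rejected")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()  # trip the breaker
            raise
        self._failures = 0  # a success closes the circuit again
        return result


# Usage with a fake clock so the example runs instantly.
now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])


def flaky() -> str:
    raise ConnectionError("service down")


outcomes: List[str] = []
for _ in range(3):
    try:
        breaker.call(flaky)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")

now[0] = 11.0  # the cool-down has passed
outcomes.append(breaker.call(lambda: "ok"))
```

Once the threshold is reached, the third call is rejected without touching the failing service, which gives that service time to recover. In a mesh this behaviour is configured on the proxy rather than written into each application.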

Though a Service Mesh offers a lot of flexibility and benefits in terms of deploying, managing, and monitoring microservices, it comes with a few disadvantages:

- Most Service Mesh implementations are not fully production-ready, because the technology is still immature and evolving.

- The initial setup requires a high investment in build and tooling.

Popular Service Mesh Tools for Kubernetes

MuleSoft Anypoint Service Mesh

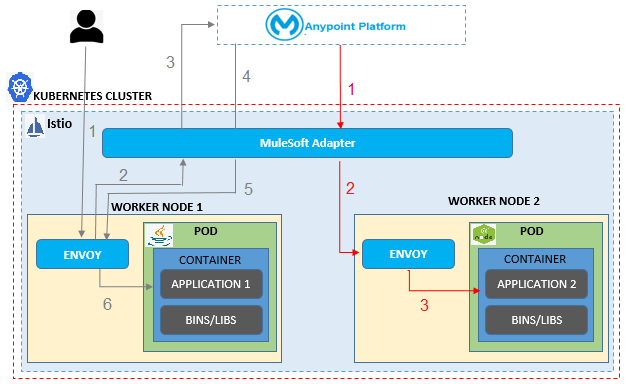

The Anypoint Service Mesh can be thought of as an API management tool for managing both MuleSoft and non-MuleSoft applications deployed on a Kubernetes cluster. This is achieved by installing the Mule layer (Mule Adapter) inside the Istio service mesh in your Kubernetes cluster.

The above architectural diagram shows how communication happens when Anypoint Service Mesh (MuleSoft Adapter) is installed in Kubernetes. Any external call travels through the Envoy, is redirected to the MuleSoft Adapter for policy validation, and is then passed back to the microservice through the Envoy. In this way, the security policies and features of both Kubernetes and Anypoint Manager can be applied together to all the microservices. Policies such as Rate Limiting, Rate Limiting SLA, JWT, and Client ID enforcement can be applied to east-west traffic. Data transformation and payload-based policies are better suited to central API Gateways.
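As an illustration of what a Rate Limiting policy does at the point where a call is validated, here is a minimal fixed-window limiter in Python. It is a sketch under stated assumptions and does not reflect how MuleSoft implements its policies; the class, its parameters and the client IDs are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each time window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        # client ID -> (window start time, requests seen in that window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, client_id: str) -> bool:
        now = self._clock()
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # the old window expired, start a new one
        if count >= self.limit:
            self._windows[client_id] = (start, count)
            return False  # over the limit: reject the request
        self._windows[client_id] = (start, count + 1)
        return True


# Usage with a fake clock so the example runs instantly.
now = [0.0]
limiter = FixedWindowRateLimiter(limit=2, window_seconds=60.0,
                                 clock=lambda: now[0])
decisions = [limiter.allow("client-a") for _ in range(3)]
other_client = limiter.allow("client-b")
now[0] = 60.0  # a new window begins
after_reset = limiter.allow("client-a")
```

Counting per client ID is what lets a limit be tied to a particular consumer, in the spirit of the Rate Limiting SLA and Client ID enforcement policies mentioned above.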

The key aspects of the Anypoint Service Mesh are:

- All the non-Mule APIs running inside the Kubernetes cluster can be made discoverable inside the Anypoint platform and can be accessed and managed from Exchange, API Designer, and API Manager.

- The capabilities of API Manager, such as policies, security enforcement, and analytics, can be applied to non-Mule services.

- The reusability of non-Mule applications in MuleSoft is maximised, i.e. polyglot deployments are supported.

Conclusion

With the rapidly evolving cloud space, the advancement of technology and digitisation, more organisations are adopting the Microservice architecture to create single-scope services called Microservices.

This leads to embracing tools such as Docker for containerisation and Kubernetes for orchestrating the Microservices. The increasing number of Microservices has created the need for a technology that can secure, route, and manage traffic within the fast-growing network.

The use of Service Mesh meets this need and also offers great visualisation of traffic, failures, and log metrics using different dashboard tools.

Organisations that run only a handful of Microservices, or whose applications are still evolving rapidly, are the exception. For a large number of other companies, Anypoint Service Mesh makes it possible to leverage the capabilities of non-MuleSoft Microservices that would otherwise sit outside the scope of MuleSoft.